Beyond Rigid Worlds: Representing and Interacting with Non-Rigid Objects Workshop

513 Yeongdong-daero, Gangnam District

Floor 4F, Room 403

9:30AM – 12:30PM


The physical world is inherently non-rigid and dynamic. However, many modern robotic modeling and perception stacks assume rigid and static environments, limiting their robustness and generality in the real world. Non-rigid objects such as ropes, cloth, plants, and soft containers are common in daily life, and many environments, including sand, fluids, flexible structures, and dynamic scenes, exhibit deformability and history dependence that challenge traditional assumptions in robotics.

This workshop comes at a pivotal moment: advances in foundation models, scalable data collection, differentiable physics, and 3D modeling and reconstruction create new opportunities to represent and interact in non-rigid, dynamic worlds. At the same time, real-world applications increasingly demand systems for handling soft, articulated, or granular dynamic objects. The workshop will convene researchers from robotics, computer vision, and machine learning to tackle shared challenges in perception, representation, and interaction in non-rigid worlds. By surfacing emerging solutions and promoting cross-disciplinary collaboration, the workshop aims to advance the development of more generalizable models grounded in data and physics for real-world robotic interaction.

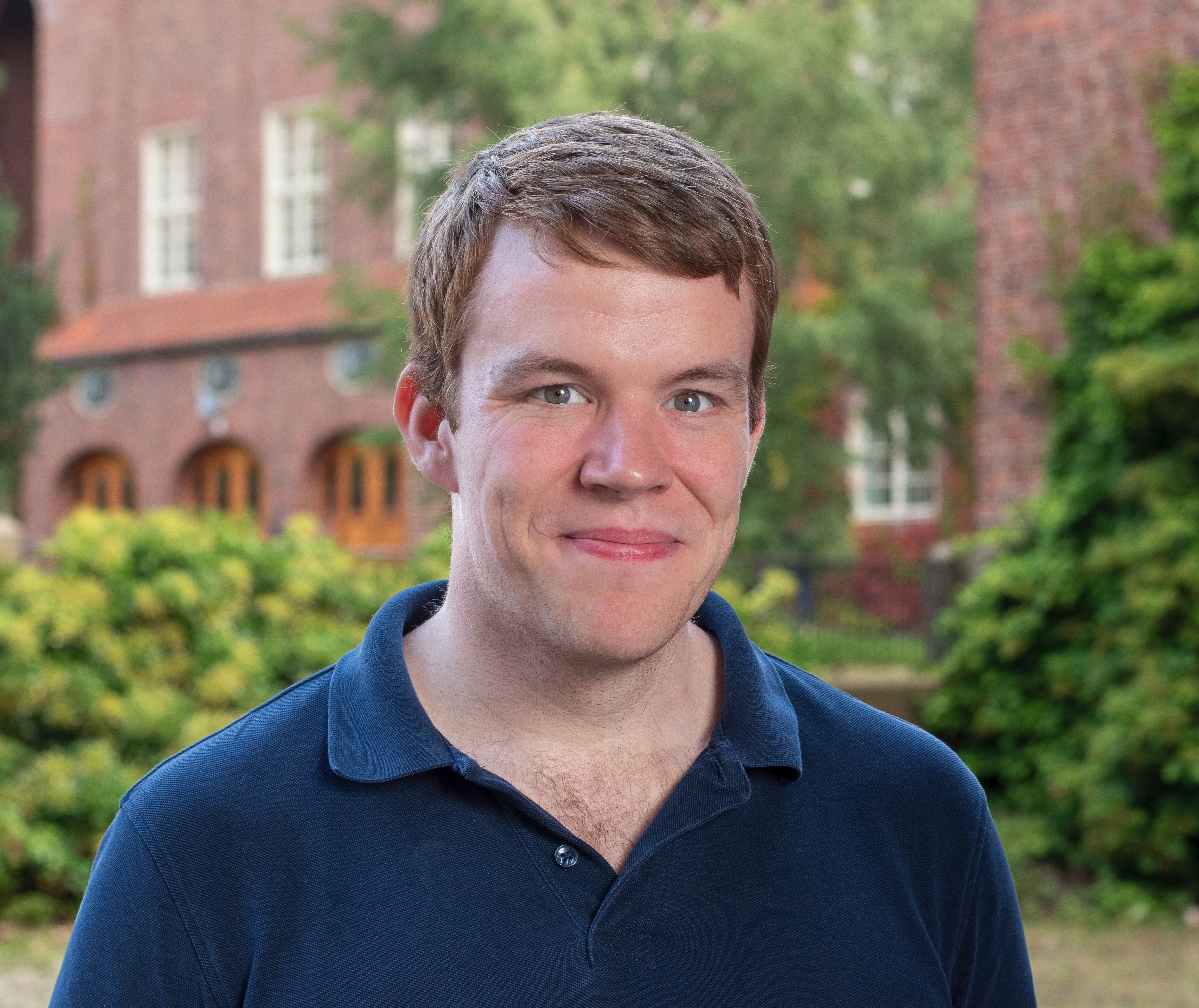

Short Bio: Jeannette Bohg is an Assistant Professor of Computer Science at Stanford University. She was a group leader at the Autonomous Motion Department (AMD) of the MPI for Intelligent Systems until September 2017. Before joining AMD in January 2012, Professor Bohg earned her Ph.D. at the Division of Robotics, Perception and Learning (RPL) at KTH in Stockholm. In her thesis, she proposed novel methods towards multi-modal scene understanding for robotic grasping. She also studied at Chalmers in Gothenburg and at the Technical University in Dresden, where she received her Master in Art and Technology and her Diploma in Computer Science, respectively. Her research focuses on perception and learning for autonomous robotic manipulation and grasping. She is specifically interested in developing methods that are goal-directed, real-time and multi-modal such that they can provide meaningful feedback for execution and learning. Professor Bohg has received several Early Career and Best Paper awards, most notably the 2019 IEEE Robotics and Automation Society Early Career Award and the 2020 Robotics: Science and Systems Early Career Award.

Talk Title: Fine Sensorimotor Skills for Using Tools, Operating Devices, Assembling Parts, and Manipulating Non-Rigid Objects

Short Bio: Yunzhu Li is an Assistant Professor of Computer Science at Columbia University, where he leads the Robotic Perception, Interaction, and Learning Lab (RoboPIL). Prior to joining Columbia, he was an Assistant Professor in the Department of Computer Science at the University of Illinois Urbana-Champaign. He completed a postdoctoral fellowship at the Stanford Vision and Learning Lab, working with Fei-Fei Li and Jiajun Wu. He earned his Ph.D. from the Computer Science and Artificial Intelligence Laboratory (CSAIL) at MIT, advised by Antonio Torralba and Russ Tedrake, and his bachelor’s degree from Peking University in Beijing. Professor Li's work is distinguished by best paper awards at ICRA and CoRL and research and innovation awards from Amazon and Sony.

Talk Title: Simulating and Manipulating Deformable Objects with Structured World Models

Short Bio: Siyuan Huang is a Research Scientist at the Beijing Institute for General Artificial Intelligence (BIGAI), directing the Embodied Robotics Center and BIGAI-Unitree Joint Lab of Embodied AI and Humanoid Robot. He received his Ph.D. from the Department of Statistics at the University of California, Los Angeles (UCLA). His research aims to build a general agent capable of understanding and interacting with 3D environments as humans do. To achieve this, his work has made contributions in (i) developing scalable and hierarchical representations for 3D reconstruction and semantic grounding, (ii) modeling and imitating human interactions with the 3D world, and (iii) building robots proficient in interactions within the 3D world and with humans.

Talk Title: Learning to Build Interactable Replica of Articulated World

Short Bio: Steve Xie is the founder and CEO of Lightwheel, a leader in simulation and synthetic data infrastructure for embodied AI. Lightwheel partners with organizations such as NVIDIA, Google DeepMind, Figure AI, and Stanford University to accelerate robotics research and deployment. Steve previously led autonomous driving simulation at NVIDIA and Cruise, where he built large-scale synthetic data pipelines that set new benchmarks for realism and Sim2Real transfer. He holds a B.S. in Physics from Peking University and a Ph.D. from Columbia University.

Talk Title: How Lightwheel Accelerates Non-Rigid Body Tasks with Simulation

| Time | Session |
| --- | --- |
| 9:30 - 9:35 | Introduction and Opening Remarks |
| 9:35 - 10:00 | Academic Perspective: Jeannette Bohg |
| 10:00 - 10:30 | Spotlight Session and Poster Overview |
| 10:30 - 11:00 | Coffee Break and Poster Session |
| 11:00 - 11:25 | Academic Perspective: Yunzhu Li |
| 11:25 - 11:35 | Industry Perspective: Steve Xie |
| 11:35 - 12:00 | Academic Perspective: Siyuan Huang |
| 12:00 - 12:30 | Panel Discussion |

| Title | Authors | Award |
| --- | --- | --- |
| A Unified Framework for Posing Strongly-Coupled Multiphysics for Robotics Simulation | Jeong Hun Lee, Junzhe Hu, Sofia Kwok, Carmel Majidi, Zachary Manchester | |
| Mash, Spread, Slice! Learning to Manipulate Object States via Visual Spatial Progress | Priyanka Mandikal, Jiaheng Hu, Shivin Dass, Sagnik Majumder, Roberto Martín-Martín*, Kristen Grauman* | ⭐ Best Poster Award ⭐ |
| JIGGLE: An Active Sensing Framework for Boundary Parameters Estimation in Deformable Surgical Environments | Nikhil Uday Shinde*, Xiao Liang*, Fei Liu, Yutong Zhang, Florian Richter, Sylvia Lee Herbert, Michael C. Yip | |
| ViTacFormer: Learning Cross-Modal Representation for Visuo-Tactile Dexterous Manipulation | Liang Heng*, Haoran Geng*, Kaifeng Zhang, Pieter Abbeel, Jitendra Malik | |
| Articulated Object Manipulation Using Online Axis Estimation with SAM2-Based Tracking | Xi Wang*, Tianxing Chen*, Tianling Xu, Yiting Fu, Ziqi He, BingYu Yang, Qiangyu Chen, Kailun Su | |
| Learning Equivariant Neural-Augmented Object Dynamics from Few Interactions | Sergio Orozco, Brandon B. May, Tushar Kusnur, George Konidaris, Laura Herlant | ⭐ Best Extended Abstract ⭐ |
| Real-Time Tracking of Origami with Physics Simulator Considering Fold Lines | Hiroto Arasaki, Akio Namiki | |

We invite extended abstracts of up to 5 pages (excluding references, acknowledgments, limitations, and appendix) formatted in the CoRL template and submitted via the RINO OpenReview console. The extended abstract should be submitted together with its references, acknowledgments, limitations, and appendices as a single .pdf file. Supplementary videos, websites, or data are welcome in the OpenReview submission but not required, and they must preserve the anonymity of author identities and affiliations.

Best Extended Abstract and Best Poster Awards: The workshop will recognize outstanding contributions with a Best Extended Abstract Award ($300) and a Best Poster Award ($200).

Submissions will be reviewed in a double-blind process by workshop organizers and attendees.

Each submission must nominate one author as a reviewer to evaluate one other contribution, following the CoRL main track's reciprocal review model. Authors are strongly encouraged to incorporate the feedback received from the reviewers.

Accepted abstracts will be published on the workshop website, invited for spotlight presentations, and presented as posters.

We encourage submission of in-progress work and extensions of previously published material; originality is welcome but not required.

However, workshop paper versions of papers accepted to the CoRL 2025 main track are not permitted. We strongly encourage in-person participation of at least one author in the workshop.

The timezone for all deadlines is Anywhere on Earth (AoE).

| Milestone | Date |
| --- | --- |
| Paper Submission Opens | Aug 1 |
| Paper Submission Deadline | |
| Review Period | Aug 25 - Sep 6 |
| Author Notification | Sep 8 |
| Camera-ready Deadline | Sep 17 |
| Spotlight Video Deadline | Sep 21 |
| Poster Deadline | Sep 23 |